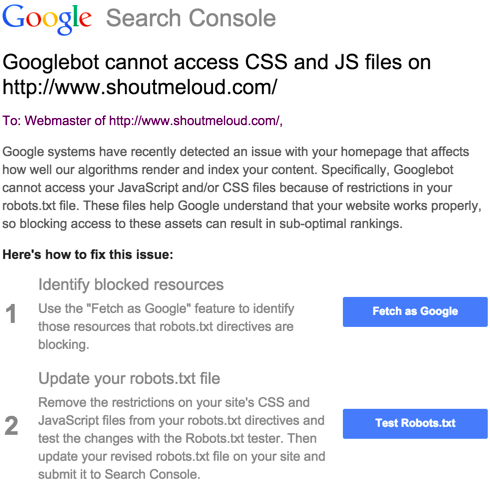

Ultimate Guide to Resolving ‘Googlebot Cannot Access CSS and JS Files’ Error in WordPress

If you’ve run into a “Googlebot blocked by robots.txt” warning in WordPress, then you’ve come to the right place.

Whether you’re a WordPress developer or a total beginner, you have probably had a run-in with Googlebot errors. These are annoying because they can hurt your site’s visibility in Google Search.

This article outlines how to resolve the ‘Googlebot cannot access CSS and JS files’ error for WordPress sites.

Let’s get started!

Why Does Googlebot Need Access to CSS and JS Files?

The best way to fix a problem is to understand its cause first.

Google prefers to rank sites that are user-friendly: websites that load fast, offer good user experiences, etc. In order to determine a website’s user experience, Google needs access to the site’s CSS and JavaScript files.

The default WordPress settings do not prevent search bots from accessing any CSS or JS files. However, some site owners may block search bots after adding extra security measures, such as a security plugin.

What’s Up with WordPress Robots.txt?

Before diving into the solutions, let’s understand what the robots.txt file does.

This file acts like a traffic cop for Googlebot and other search engine crawlers, telling them what to crawl and what not to crawl.

By default, WordPress generates a virtual robots.txt file that works just fine. However, security plugins sometimes add lines like Disallow: /wp-includes/, which leads to this error.

The robots.txt file in WordPress resides in your site’s root directory, and you can access it through FTP or your hosting control panel.
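To see how a single Disallow line ends up blocking assets, here is a minimal Python sketch that parses a robots.txt body and lists the asset URLs Googlebot would be refused. The sample file contents, the `example.com` URLs, and the helper name `blocked_assets` are all assumptions made up for illustration. Note that Python's standard-library parser applies rules in file order, which is not exactly how Google resolves conflicts between Allow and Disallow, so treat it as a rough check only.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, as a security plugin might leave it.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /wp-includes/
"""

# Hypothetical asset URLs on a made-up site.
ASSETS = [
    "https://example.com/wp-includes/js/jquery/jquery.min.js",
    "https://example.com/wp-includes/css/dist/block-library/style.min.css",
    "https://example.com/wp-content/themes/mytheme/style.css",
]


def blocked_assets(robots_txt, asset_urls, user_agent="Googlebot"):
    """Return the URLs in asset_urls that user_agent may not crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in asset_urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_assets(SAMPLE_ROBOTS_TXT, ASSETS):
        print("Blocked:", url)
```

With the sample rules, the two files under /wp-includes/ are reported as blocked, while the theme stylesheet is not.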

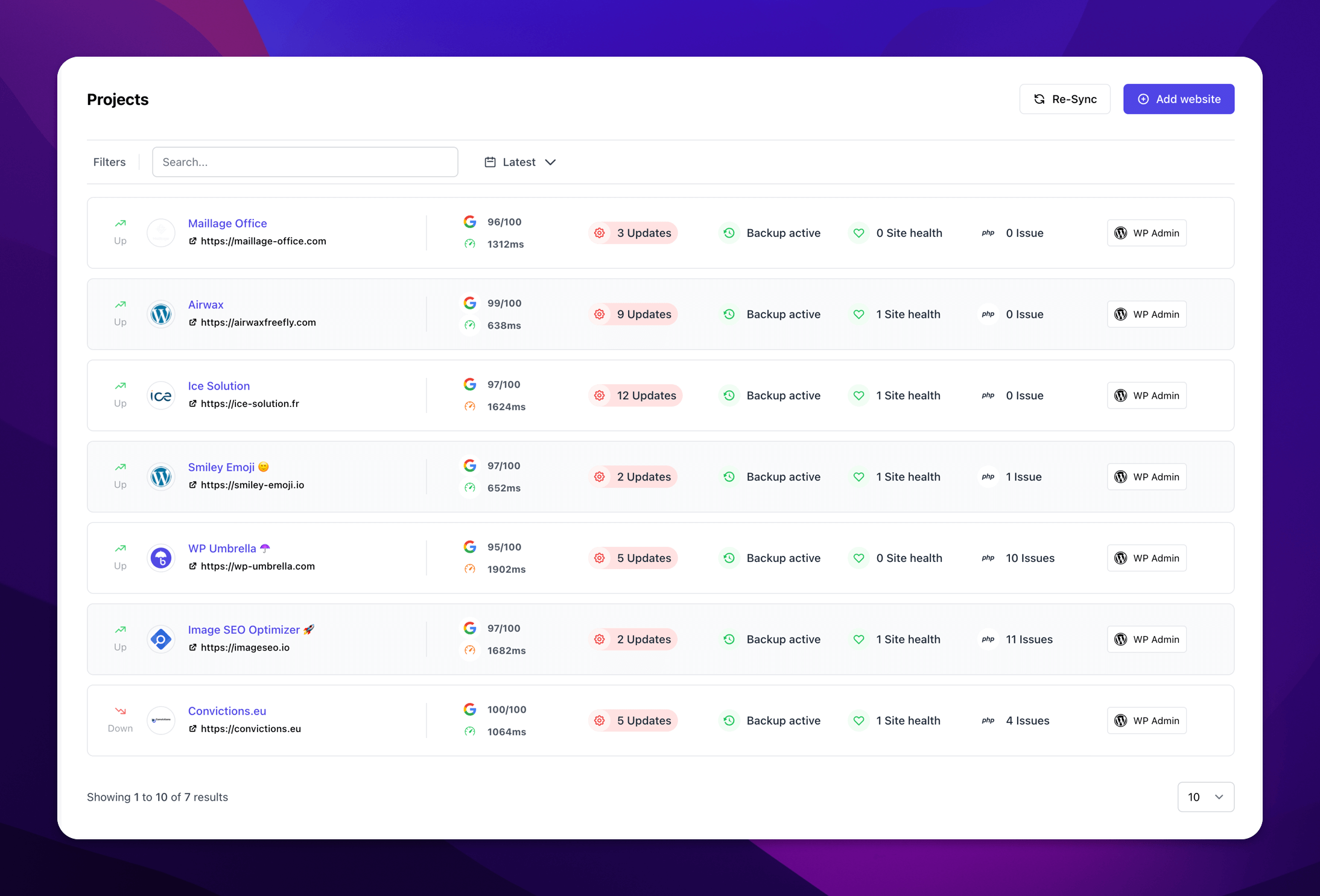


Other Common WordPress Errors to Look Out For

While you’re at it, you may also want to check for other common WordPress errors, like the “Missing a Temporary Folder” error. We’ve got a full guide on that too!

Troubleshooting: Fixing the “Googlebot Cannot Access CSS and JS Files” Error in WordPress

Option 1: Manually Editing WordPress Robots.txt File

How to Edit robots.txt for Googlebot:

A typical robots.txt file lists your sitemap and a few crawl rules. To fix the Googlebot cannot access CSS and JS files error, those rules must not block the folders that hold your CSS and JS files.

A WordPress robots.txt file is easy to read. A typical one looks like this:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

Disallow: /wp-content/plugins/

Disallow: /wp-content/themes/

Allow: /wp-includes/js/

Disallow: /folder means that the folder won’t be crawled by Googlebot.

Allow: /folder means that the folder will be crawled by Googlebot.
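When an Allow and a Disallow rule both match the same file, Google documents that the most specific (longest) matching rule wins, and that Allow wins a tie. The Python sketch below illustrates that precedence for plain path prefixes only. The function name `is_allowed` and the rule list are assumptions for illustration, and wildcards (`*` and `$`) are deliberately left out.

```python
def is_allowed(rules, path):
    """Decide whether path may be crawled, using longest-match precedence.

    rules is a list of (directive, prefix) pairs such as
    ("disallow", "/wp-includes/"). Wildcards are not handled in this sketch.
    """
    best_len = -1
    allowed = True  # no matching rule means the path is crawlable
    for directive, prefix in rules:
        # An empty prefix (e.g. a bare "Disallow:") matches nothing.
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        longer = len(prefix) > best_len
        tie_won_by_allow = len(prefix) == best_len and is_allow
        if longer or tie_won_by_allow:
            best_len = len(prefix)
            allowed = is_allow
    return allowed


# Rules modelled on the sample robots.txt in this article.
RULES = [
    ("disallow", "/wp-admin/"),
    ("disallow", "/wp-includes/"),
    ("allow", "/wp-includes/js/"),
]

if __name__ == "__main__":
    for path in ("/wp-includes/js/jquery/jquery.min.js",
                 "/wp-includes/css/dashicons.min.css"):
        print(path, "->", "allowed" if is_allowed(RULES, path) else "blocked")
```

Under these rules the JS file is crawlable because the longer Allow prefix applies, while the CSS file in the same parent folder stays blocked. That is why removing the Disallow: /wp-includes/ line is the simpler fix.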

To fix the “Googlebot cannot access CSS and JS files” Error in WordPress, you need to allow Googlebot to crawl the folder where your CSS and JS files are stored.

Most of the time, removing the following line will fix the issue: Disallow: /wp-includes/

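Afterward, save your robots.txt file and upload it. Then run “fetch and render” in Google Search Console (in the current Search Console, the URL Inspection tool’s live test does this job). Compare the results with the earlier ones, and you should see that many of the previously blocked resources are no longer blocked.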
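If you would rather script the edit than do it by hand, the following Python sketch strips one exact Disallow rule from a robots.txt body and leaves every other line untouched. The helper name `remove_disallow` and the sample text are assumptions for illustration. You would still need to download the file first and upload the result yourself.

```python
def remove_disallow(robots_txt, blocked_path="/wp-includes/"):
    """Return robots_txt without any 'Disallow: <blocked_path>' line."""
    kept = []
    for line in robots_txt.splitlines():
        # Ignore a trailing comment, then split "Directive: value".
        directive, _, value = line.split("#", 1)[0].partition(":")
        is_target = (directive.strip().lower() == "disallow"
                     and value.strip() == blocked_path)
        if not is_target:
            kept.append(line)
    return "\n".join(kept) + "\n" if kept else ""


if __name__ == "__main__":
    # Hypothetical file contents for demonstration.
    original = (
        "User-agent: *\n"
        "Disallow: /wp-admin/\n"
        "Disallow: /wp-includes/\n"
    )
    print(remove_disallow(original), end="")
```

Only an exact match on the path is removed, so a narrower rule such as Disallow: /wp-includes/private/ would be kept.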

Tips

If you find your robots.txt file empty or nonexistent, don’t worry! With no rules to follow, Googlebot treats every file on your site as crawlable.

Option 2: Use a Plugin to Edit Robots.txt File

You can also edit your robots.txt file with Yoast, SEOPress, or Rankmath. Each plugin has a simple interface that allows you to remove the line blocking Googlebot.

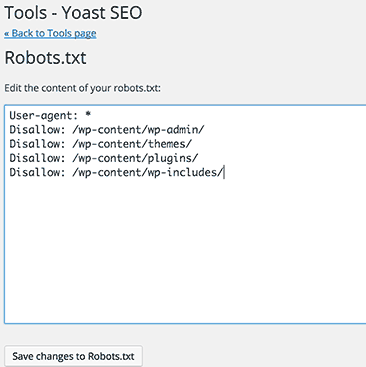

Edit your robots.txt file with Yoast

It is a lot easier to make or edit a robots.txt file using Yoast SEO. For this, follow the steps below.

Step 1: Go to your WordPress website and log in.

Once logged in, you will land on your ‘Dashboard’.

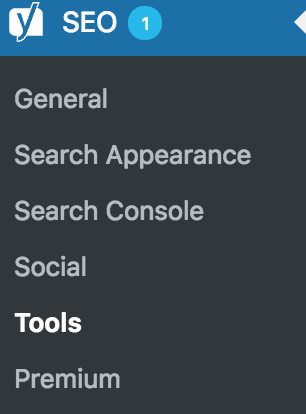

Step 2: Click on the ‘SEO’ tab.

There is a menu on the left-hand side of the screen. Select ‘SEO’ from that menu.

Step 3: Then, click on ‘Tools’.

You will find additional options in SEO settings. Click on ‘Tools’.

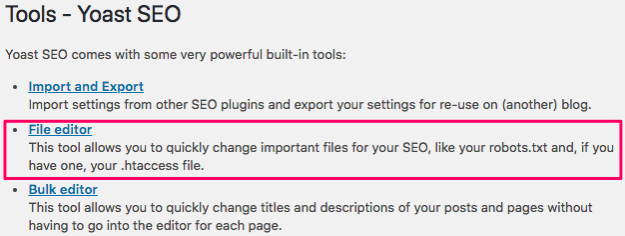

Step 4: Click on ‘File Editor’.

And that’s it, you just have to remove the line “Disallow: /wp-includes/” and click on “save changes to robots.txt”.

Edit your robots.txt file with SEOPress

Step 1: Go to the SEO tab.

Step 2: Check the green radio button associated with Robots.

Step 3: Enable the robots.txt virtual file by clicking Manage.

Step 4: Write your robots.txt file in Virtual Robots.txt and save.

Make sure to remove the line “Disallow: /wp-includes/”

If you want to see your robots.txt file, click View your robots.txt or go to ‘yoursite.com/robots.txt’

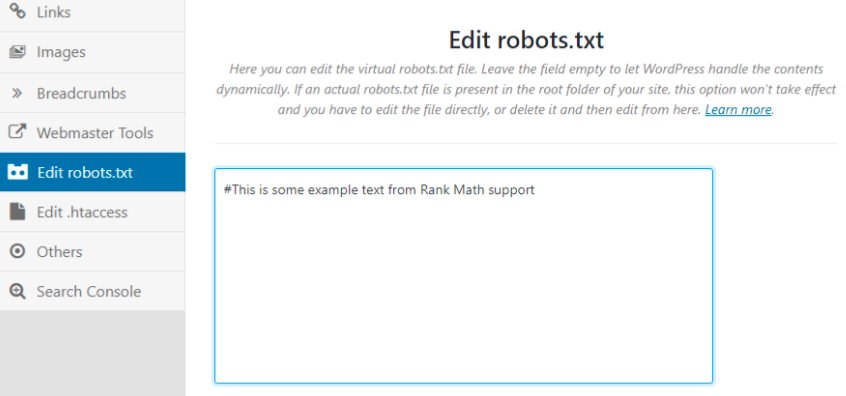
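Whichever plugin you use, you can confirm the result by reading the live file at /robots.txt. The Python sketch below builds that URL from a site address and lists any remaining Disallow lines. The function names and the `yoursite.com` address are placeholders, not part of any plugin, and the fetch assumes the site is publicly reachable.

```python
from urllib.parse import urljoin
from urllib.request import urlopen


def robots_txt_url(site_url):
    """Build the robots.txt URL at the root of site_url."""
    return urljoin(site_url, "/robots.txt")


def fetch_disallow_lines(site_url, timeout=10):
    """Download robots.txt and return its Disallow lines (needs network access)."""
    with urlopen(robots_txt_url(site_url), timeout=timeout) as response:
        text = response.read().decode("utf-8", errors="replace")
    return [
        line.strip()
        for line in text.splitlines()
        if line.strip().lower().startswith("disallow:")
    ]


if __name__ == "__main__":
    # Placeholder address; replace it with your own site.
    print(robots_txt_url("https://yoursite.com"))
```

If “Disallow: /wp-includes/” no longer appears in the list returned by `fetch_disallow_lines`, the change has been saved.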

Edit robots.txt with Rankmath

On Rankmath, the robots.txt file is editable as shown below:

Once again, remove the line “Disallow: /wp-includes/”

And that’s it!

What if I Want to Enable JavaScript in WordPress?

If you’re looking to enable JavaScript in WordPress, it’s usually not blocked by the robots.txt file, but you might need to delve into your theme settings or use a plugin to efficiently add scripts.

Wrapping It Up: Googlebot Issues & SEO optimization

You’re now well-equipped to tackle Googlebot issues like a pro! Whether you decided to manually edit your robots.txt or use a plugin like Yoast SEO, SEOPress, or Rankmath, you’ve taken a critical step in optimizing your WordPress site for search engines.

Keep in mind that it may take a little time for Google to re-crawl your site and reflect the changes, so don’t fret if you don’t see immediate results.

FAQs on Googlebot and WordPress

Why can’t Googlebot access my CSS and JS files?

This usually happens due to specific lines added to the robots.txt file that disallow Googlebot from crawling certain parts of your site.

Is the “Googlebot cannot access CSS and JS files” alert reliable?

Yes, the alert is a reliable indicator that Googlebot encountered problems while trying to crawl your site.

How long does it take for Google to reflect the changes?

It varies but generally takes a few days to a few weeks for Google to re-crawl your site and update its index.